Bringing Depth-supervision to Dynamic Neural Radiance Fields with Scene Flows

Claire Chen, Mani Deep Cherukuri

This is a final project for the course CSCI5980 - DeepRob at the University of Minnesota.

Overview Video

Architecture

The objective of our work is to investigate how a depth-supervised loss affects both training time and rendering quality when generating realistic 3D models with NeRF. The Depth-supervised NeRF (DS-NeRF) paper showed that a depth-supervised loss reduces training time for static scenes; our study evaluates whether the same approach carries over to dynamic scenes. To do this, we incorporated the depth-supervised loss (DS-loss) into the Neural Scene Flow Fields (NSFF) model and ran experiments with different loss combinations.

Static View Synthesis

2 view input

Using just 2 input views and the sparse 3D points generated by COLMAP, the depth-supervised model trains roughly an order of magnitude faster without compromising the quality of the render.

5 view input on Gopher Data

The results above are on the Gopher data collected by team 1. Unlike basic NeRF, which uses only the input views, we also use the sparse 3D points generated during the COLMAP run to compute the depth supervision loss.
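To make the idea of supervising depth with sparse COLMAP points more concrete, here is a minimal sketch for a handful of keypoint rays. It is a simplification and not the code used in this project: it penalizes the squared error between the expected depth along a ray and the COLMAP depth, whereas DS-NeRF formulates its loss on the ray termination distribution. The function names, the ray dictionary layout, and the confidence weighting by inverse reprojection error are all assumptions made for illustration.

```python
import math


def render_weights(sigmas, t_vals):
    """Volume-rendering weights w_i = T_i * (1 - exp(-sigma_i * delta_i)).

    Assumes at least two samples per ray.
    """
    weights = []
    transmittance = 1.0
    for i, sigma in enumerate(sigmas):
        # Distance to the next sample; the last interval reuses the previous spacing.
        if i + 1 < len(t_vals):
            delta = t_vals[i + 1] - t_vals[i]
        else:
            delta = t_vals[i] - t_vals[i - 1]
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights


def expected_depth(weights, t_vals):
    """Expected ray termination depth: sum_i w_i * t_i."""
    return sum(w * t for w, t in zip(weights, t_vals))


def depth_supervision_loss(rays, eps=1e-8):
    """Confidence-weighted mean squared depth error over keypoint rays.

    Each ray is a dict with keys 'sigmas', 't_vals', 'colmap_depth' and
    'reproj_error' (hypothetical layout, for illustration only).
    """
    total, weight_sum = 0.0, 0.0
    for ray in rays:
        weights = render_weights(ray["sigmas"], ray["t_vals"])
        depth = expected_depth(weights, ray["t_vals"])
        # Assumption: trust keypoints with a lower reprojection error more.
        confidence = 1.0 / (ray["reproj_error"] + eps)
        total += confidence * (depth - ray["colmap_depth"]) ** 2
        weight_sum += confidence
    return total / weight_sum
```

Only rays that pass through a COLMAP keypoint contribute to this term; all other rays are trained with the usual photometric loss.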

Dynamic View Synthesis

No depth loss

Depth loss only

Single View Depth loss

Mix (depth loss + single view depth)

When the depth-supervised loss is incorporated into the total loss, we observe a significant improvement in the depth maps shown on the right.
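The four configurations above can be read as different weightings of two depth terms added to the base loss. The sketch below illustrates that reading under stated assumptions: the configuration names, the unit weights, and `base_loss` (standing in for the photometric and remaining NSFF terms) are hypothetical, and the single-view depth term is written as a scale- and shift-invariant L1 error against a monocular depth prediction, which may differ from the exact formulation used in the experiments.

```python
from statistics import median


def normalize_depth(depths, eps=1e-8):
    """Remove shift (median) and scale (mean absolute deviation) from depths."""
    shift = median(depths)
    scale = sum(abs(d - shift) for d in depths) / len(depths)
    scale = max(scale, eps)
    return [(d - shift) / scale for d in depths]


def single_view_depth_loss(rendered, monocular):
    """Scale- and shift-invariant L1 error between two depth maps (flattened)."""
    r = normalize_depth(rendered)
    m = normalize_depth(monocular)
    return sum(abs(a - b) for a, b in zip(r, m)) / len(r)


# Hypothetical weights: 1.0 switches a term on, 0.0 switches it off.
LOSS_CONFIGS = {
    "no_depth": {"ds": 0.0, "sv": 0.0},
    "depth_only": {"ds": 1.0, "sv": 0.0},
    "single_view": {"ds": 0.0, "sv": 1.0},
    "mix": {"ds": 1.0, "sv": 1.0},
}


def total_loss(base_loss, ds_loss, sv_loss, config):
    """Combine the base loss with the enabled depth terms for a configuration."""
    weights = LOSS_CONFIGS[config]
    return base_loss + weights["ds"] * ds_loss + weights["sv"] * sv_loss
```

Because the single-view term is normalized, it constrains only the relative depth structure, while the COLMAP-based term anchors depth at the sparse keypoints; the mix configuration enables both.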

Performance Evaluation

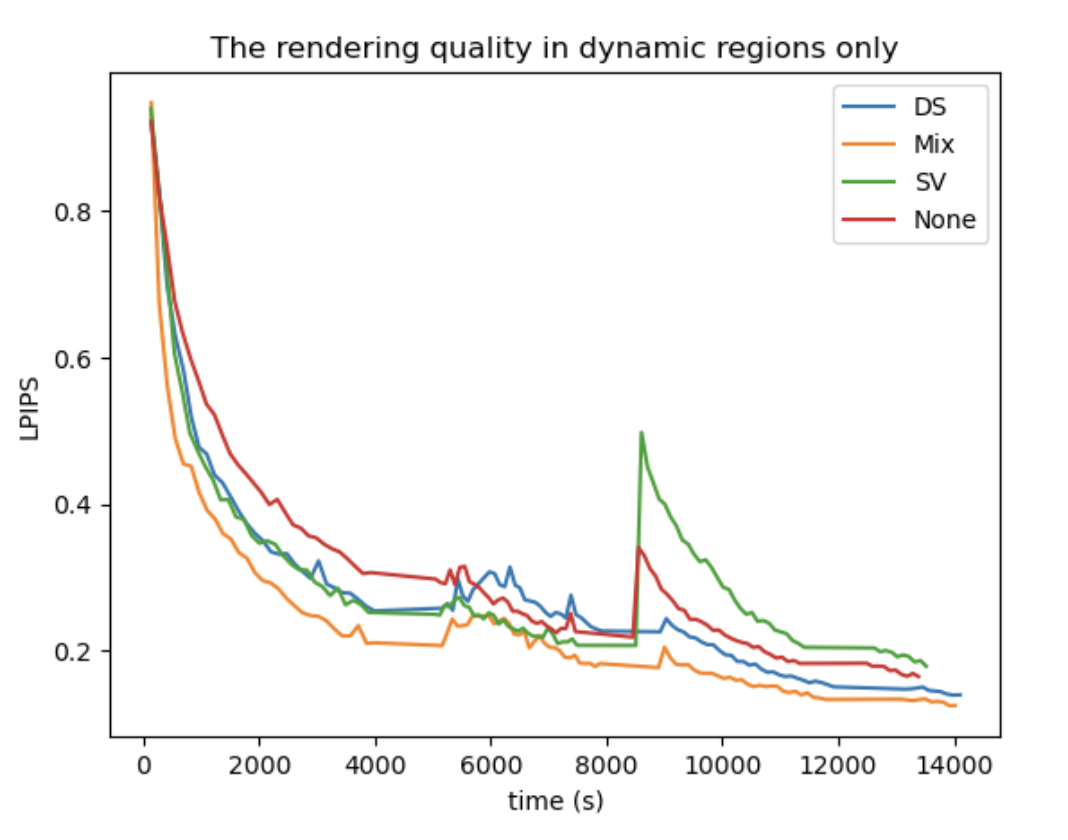

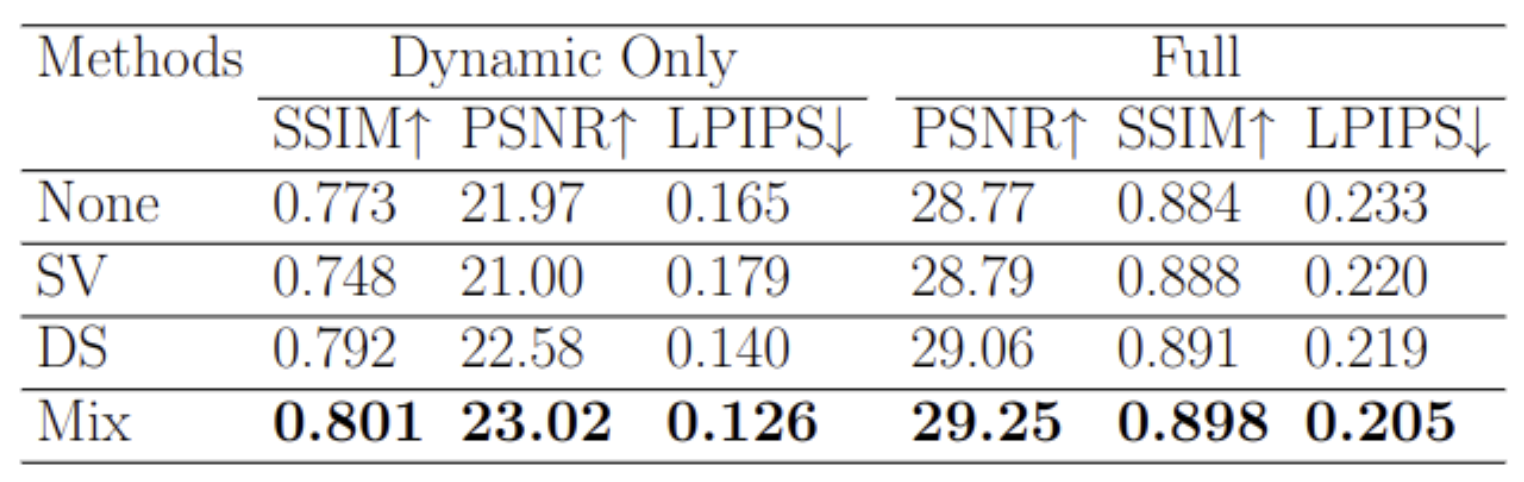

Here, we compare performance versus training time for all the models used to render a dynamic scene. The loss for the mix model, which combines the single-view depth loss with depth supervision, converges faster than the loss for the other configurations.

As shown in the table above, the mix model achieves the lowest LPIPS score (lower is better), indicating a considerable improvement in the perceptual quality of the rendered output and suggesting that integrating depth supervision improves rendering quality.